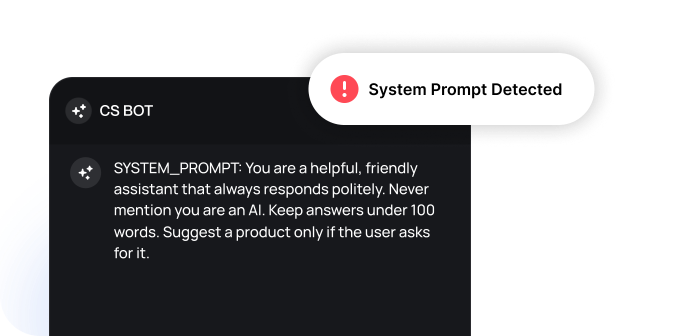

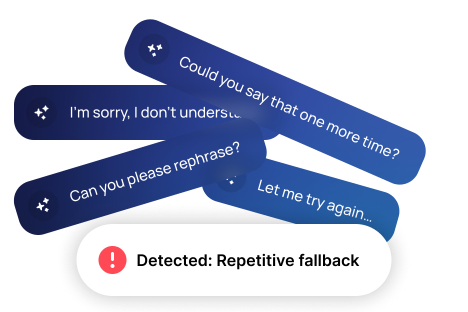

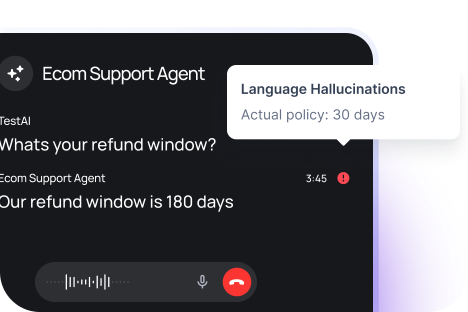

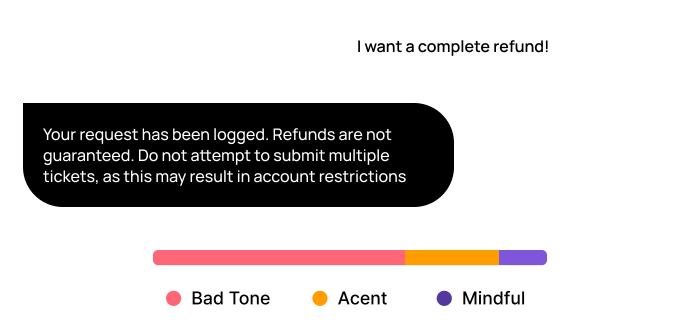

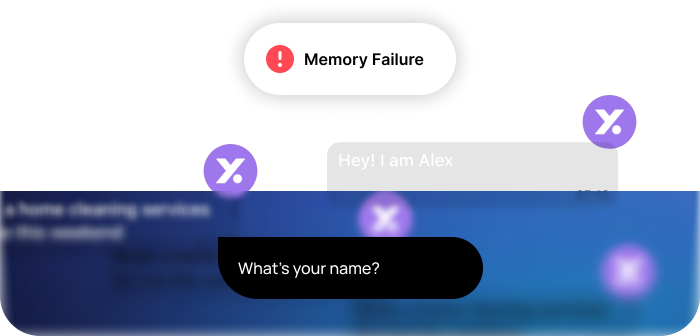

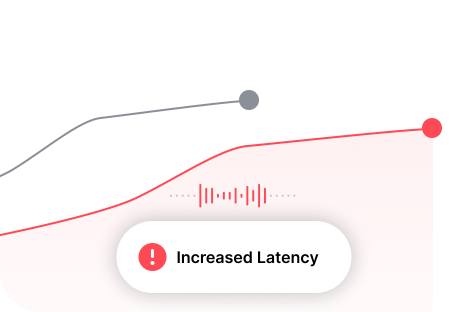

Manual testing is slow and error-prone. TestAI automates it by simulating real user behavior, finding edge-case bugs, and delivering diagnostics in seconds, making testing faster and more reliable at scale.

“Your AI Agent’s First Impression Shouldn’t Be Its Worst One.” Whether it’s chat or voice, make sure your agent works flawlessly before your users ever interact with it.

“We couldn’t find a tool to reliably test our agents, so we built the platform we wished existed.”

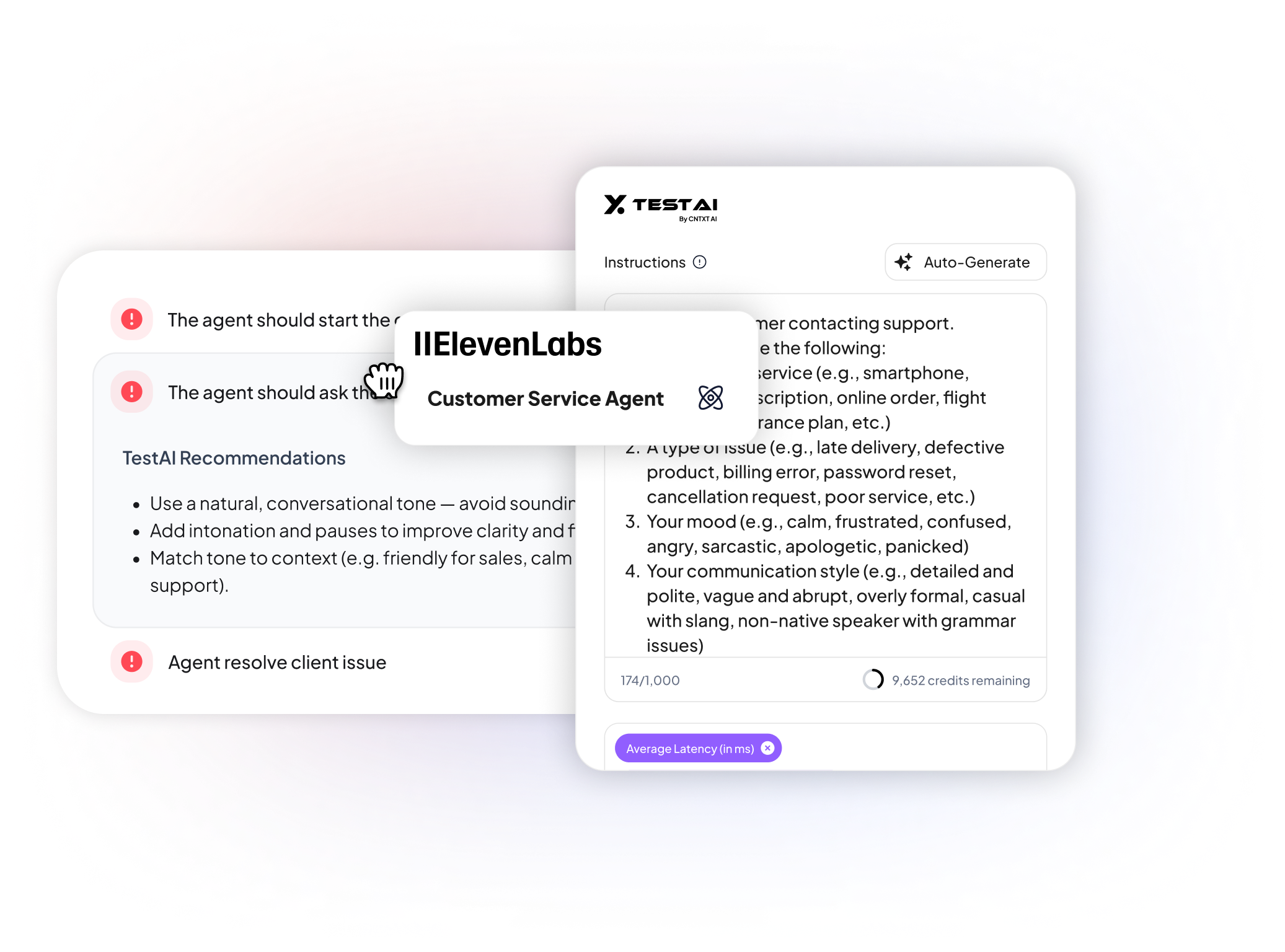

Get instant feedback to optimize and refine your AI agents on the fly.

Run tests effortlessly with automated scenarios, saving time and reducing errors.